Bits and qubits are the basic units of information in classical and quantum computing, respectively. Bits have been the foundation of classical computing for decades, while qubits are the building blocks of quantum computers, which promise large speedups for certain classes of problems. Understanding the key differences between qubits and bits is essential for grasping the potential of quantum computing and seeing where it may hold an advantage over classical systems.

Bits: The Foundation of Classical Computing

Bits are the basic units of information in classical computing systems, each representing either a 0 or a 1. This binary system forms the basis of all digital data storage and manipulation, with each bit corresponding to one of two distinguishable physical states in a computer’s memory or storage system. In a DRAM cell, for example, the two values correspond to a capacitor being charged or discharged; other technologies use voltage levels or magnetic orientation instead.

In classical computing, operations are performed on collections of bits, enabling the execution of algorithms, data processing, and various computational tasks. Because bit-based processing is deterministic, the same inputs always produce the same outputs, which gives the precise control and predictable results that have powered technological advancements for decades.
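As a minimal sketch of what "operations on collections of bits" means, the Python snippet below treats a small list of bits as a register. The helper names `bits_to_int` and `int_to_bits` are made up for this illustration and are not part of any library.

```python
def bits_to_int(bits):
    """Interpret a list of 0/1 values (most significant bit first) as an integer."""
    value = 0
    for b in bits:
        value = (value << 1) | b
    return value


def int_to_bits(value, width):
    """Return the `width` lowest bits of `value`, most significant bit first."""
    return [(value >> i) & 1 for i in reversed(range(width))]


register = [1, 0, 1, 1]
print(bits_to_int(register))   # 11

# Logic operations are deterministic: the same inputs always give the same output.
a, b = 0b1100, 0b1010
print(format(a & b, "04b"))    # 1000
print(format(a ^ b, "04b"))    # 0110

# A register of n bits holds exactly one of 2**n possible values at a time.
print(2 ** len(register))      # 16
```

The last line is the point to keep in mind for the comparison with qubits: a 4-bit register can represent 16 different values, but only one of them at any given moment.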

Qubits: The Quantum Alternative

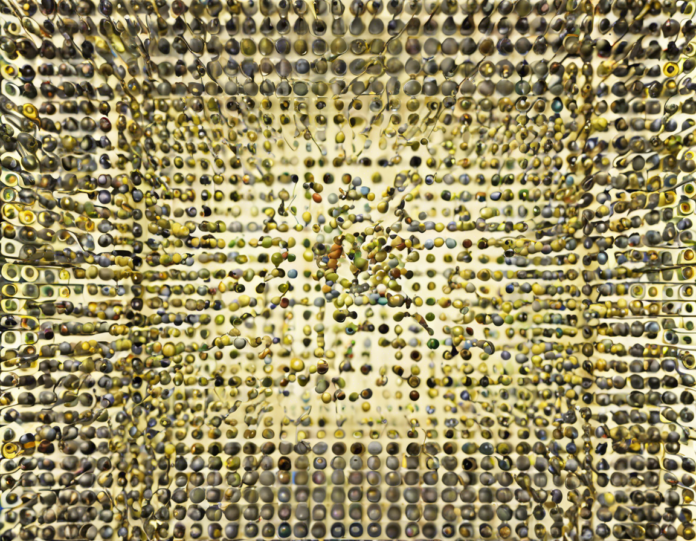

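Qubits, on the other hand, operate under the principles of quantum mechanics, offering a radical departure from the binary nature of bits. A qubit can exist not only in the state 0 or 1 but also in a superposition of both, described by two complex amplitudes whose squared magnitudes give the probabilities of measuring 0 or 1. A register of n qubits is described by 2^n amplitudes, but a measurement returns only a single n-bit result, so superposition is not the same as running every input in parallel. Quantum algorithms instead use interference between amplitudes to make useful outcomes more likely, which yields large speedups for certain problems rather than for computation in general.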
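The behaviour of a single qubit in superposition can be sketched with a tiny state-vector simulation in plain Python. This is an illustration only: the function names (`hadamard`, `probabilities`, `measure`) are chosen for this example, and the Hadamard gate is used here simply as one standard way of preparing an equal superposition.

```python
import math
import random

# A single-qubit state is a pair of amplitudes (alpha, beta) for |0> and |1>,
# with |alpha|^2 + |beta|^2 = 1.
ZERO = (1 + 0j, 0 + 0j)


def hadamard(state):
    """Apply the Hadamard gate, which turns |0> into an equal superposition."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))


def probabilities(state):
    """Measurement probabilities are the squared magnitudes of the amplitudes."""
    alpha, beta = state
    return (abs(alpha) ** 2, abs(beta) ** 2)


def measure(state, rng):
    """Simulate one measurement: returns 0 or 1, never 'both'."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


plus = hadamard(ZERO)
print(probabilities(plus))  # both values are approximately 0.5

rng = random.Random(0)
counts = [0, 0]
for _ in range(1000):
    counts[measure(plus, rng)] += 1
print(counts)  # roughly half zeros and half ones
```

Each individual measurement yields an ordinary bit. The superposition only shows up in the statistics over many runs, and in how amplitudes combine before measurement: applying `hadamard` twice returns the state to `ZERO`.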

Additionally, qubits exhibit another quantum phenomenon known as entanglement, where the state of one qubit cannot be described independently of the state of another, regardless of the physical distance between them. Measurements on entangled qubits give correlated results that independent classical bits cannot reproduce. Quantum algorithms use entanglement together with superposition as a resource for the speedups they achieve on certain problems.
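Entanglement can be illustrated with a two-qubit state vector, again as a plain-Python sketch rather than a real quantum program. The state is a list of four amplitudes for the outcomes 00, 01, 10 and 11. The helper names are hypothetical, and the Hadamard-then-CNOT circuit is the textbook way of preparing a Bell state.

```python
import math
import random

LABELS = ["00", "01", "10", "11"]


def apply_h_first(state):
    """Hadamard on the first qubit (the left bit of each label)."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def apply_cnot(state):
    """CNOT with the first qubit as control: swaps the amplitudes of 10 and 11."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def sample(state, rng):
    """Simulate measuring both qubits once."""
    weights = [abs(a) ** 2 for a in state]
    return rng.choices(LABELS, weights=weights)[0]


# Start in |00>, then entangle the two qubits.
bell = apply_cnot(apply_h_first([1, 0, 0, 0]))

rng = random.Random(1)
outcomes = [sample(bell, rng) for _ in range(1000)]
print(sorted(set(outcomes)))  # only '00' and '11' appear
```

Each qubit on its own looks like a fair coin, yet the two results always agree. That correlation is what "the state of one qubit is linked to the state of another" means in practice.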

Key Differences Between Qubits and Bits

- Superposition and Entanglement: Qubits can exist in a superposition of states and can be entangled with one another. These properties set them apart from classical bits and let quantum algorithms solve certain problems much faster than known classical methods.

- Quantum Uncertainty: Unlike classical bits, which have deterministic states, qubits introduce an element of uncertainty due to their quantum nature, making quantum computing probabilistic rather than purely deterministic.

- Computational Power: Quantum computers have the potential to solve certain complex problems far faster than classical computers, in some cases exponentially faster than the best known classical algorithms. Promising areas include cryptography and materials science, with possible gains in optimization and artificial intelligence.

- Error Correction: Bits in classical computing systems are relatively stable and less prone to errors, while qubits are sensitive to environmental noise and require sophisticated error correction techniques to ensure the accuracy of computations.

- Physical Implementation: Classical bits are typically realized using electronic circuits with transistors, while qubits can be implemented using various physical systems such as superconducting loops, trapped ions, or semiconductor-based quantum dots.
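One way to get a feel for the gap in descriptive power is to count how much classical memory it would take just to write down the state of n qubits. The sketch below assumes a full state-vector representation with 16 bytes per complex amplitude (two 64-bit floats). Both are assumptions of this example; real simulators use a range of representations.

```python
def statevector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory needed to store all 2**n amplitudes of an n-qubit state."""
    return (2 ** n_qubits) * bytes_per_amplitude


for n in (10, 30, 50):
    print(n, statevector_bytes(n))
# 10 16384
# 30 17179869184
# 50 18014398509481984
```

Ten qubits fit in 16 KiB, thirty need 16 GiB, and fifty would need 16 PiB. This growth is why even modest quantum registers are hard to simulate classically, although it does not by itself mean a quantum computer can read out all of that information.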

Challenges in Quantum Computing

While the potential of quantum computing is immense, several challenges need to be overcome to harness its power effectively. Some of the key challenges include:

- Decoherence: The fragile nature of qubits makes them susceptible to decoherence, where external factors disrupt their quantum state and lead to errors in computations.

- Scalability: Building large-scale quantum computers with hundreds or thousands of qubits while maintaining their coherence and connectivity presents a significant engineering challenge.

- Error Correction: Developing robust error correction protocols to rectify qubit errors and enhance the reliability of quantum computations is crucial for the practical implementation of quantum algorithms.

- Hardware Limitations: The physical requirements of quantum systems, such as ultra-low temperatures and isolation from external interference, pose limitations on the scalability and accessibility of quantum computers.
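The basic idea behind error correction, trading extra physical units for a lower logical error rate, can be sketched with a classical three-bit repetition code. This is only an analogue: the quantum bit-flip code uses the same majority principle but has to detect errors through parity checks instead of reading the data qubits directly. The error probability `p = 0.05` and all function names are assumptions made for this example.

```python
import random


def encode(bit):
    """Store one logical bit as three physical copies."""
    return [bit, bit, bit]


def apply_noise(codeword, p, rng):
    """Flip each physical bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in codeword]


def decode(codeword):
    """Majority vote: corrects any single flipped bit."""
    return 1 if sum(codeword) >= 2 else 0


def logical_error_rate(p, trials, rng):
    """Estimate how often the decoded bit is wrong after noise."""
    errors = 0
    for _ in range(trials):
        if decode(apply_noise(encode(0), p, rng)) != 0:
            errors += 1
    return errors / trials


def predicted_rate(p):
    """Decoding fails when two or three bits flip: 3p^2(1-p) + p^3."""
    return 3 * p**2 - 2 * p**3


rng = random.Random(42)
p = 0.05
print(predicted_rate(p))                    # about 0.00725
print(logical_error_rate(p, 100_000, rng))  # should land close to the prediction
```

With a 5% chance of error per physical bit, the encoded bit fails well under 1% of the time. The same trade-off drives quantum error correction, where many physical qubits are combined into one more reliable logical qubit.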

Applications of Quantum Computing

Despite the challenges, quantum computing holds tremendous promise for a wide range of applications that could transform industries and scientific research. Some notable applications of quantum computing include:

- Cryptography: Quantum computers have the potential to break widely used public-key cryptographic algorithms, which is driving the development of quantum-safe encryption methods to secure sensitive data.

- Drug Discovery: Quantum algorithms could simulate molecular interactions with high accuracy, accelerating the discovery of new drugs and optimizing drug development processes.

- Optimization Problems: Quantum computers may speed up the solution of optimization problems across various domains, such as logistics, supply chain management, and financial modeling, leading to more efficient resource allocation and decision-making.

- Machine Learning: Quantum machine learning algorithms could enhance pattern recognition, optimization tasks, and data analysis, paving the way for advancements in artificial intelligence.

- Materials Science: Quantum computing could enable the simulation of complex materials and molecules, leading to the discovery of novel materials with unique properties for applications in energy, electronics, and more.

Frequently Asked Questions (FAQs)

- What is the difference between qubits and classical bits?

  Answer: Qubits can exist in a superposition of the states 0 and 1, while classical bits are binary and can only be in either the 0 or the 1 state.

- How does entanglement differentiate qubits from classical bits?

  Answer: Entanglement links the states of qubits regardless of distance, so that their measurement results are correlated in ways independent classical bits cannot reproduce. Quantum algorithms rely on this as a resource.

- What are the challenges in implementing quantum computing?

  Answer: Challenges include decoherence, scalability of qubit systems, error correction, and the physical engineering requirements of quantum computers.

- In which areas can quantum computing offer significant advantages over classical systems?

  Answer: Quantum computing is expected to offer advantages in cryptography, drug discovery, optimization problems, machine learning, and materials science, where specific quantum algorithms could solve problems that are intractable for classical methods.

- How do quantum computers handle errors in computations?

  Answer: Quantum error correction protocols are employed to detect and rectify errors in qubit operations, improving the reliability and accuracy of quantum computations.

- What are some notable quantum algorithms used in quantum computing?

  Answer: Algorithms such as Shor’s algorithm for integer factorization, Grover’s algorithm for unstructured search, and the Quantum Approximate Optimization Algorithm (QAOA) are examples of quantum algorithms with significant applications in various domains.
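To make one of these algorithms concrete, here is a small classical simulation of Grover's search over 8 items, written as a sketch under simplifying assumptions. It tracks the amplitudes directly instead of building a quantum circuit, the marked index `5` is arbitrary, and the function name `grover_probabilities` is invented for this example.

```python
import math


def grover_probabilities(n_items, marked, iterations):
    """Return measurement probabilities after the given number of Grover iterations."""
    # Start in an equal superposition over all items.
    amp = [1 / math.sqrt(n_items)] * n_items
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        amp[marked] = -amp[marked]
        # Diffusion: reflect every amplitude about the mean.
        mean = sum(amp) / n_items
        amp = [2 * mean - a for a in amp]
    return [a * a for a in amp]


n_items = 8
marked = 5
# About (pi / 4) * sqrt(N) iterations bring the marked item close to certainty.
iterations = math.floor(math.pi / 4 * math.sqrt(n_items))
probs = grover_probabilities(n_items, marked, iterations)

print(iterations)               # 2
print(round(probs[marked], 3))  # 0.945
```

After only two iterations the marked item is measured with about 94.5% probability, compared with 12.5% for a random guess. The number of iterations grows with the square root of the number of items, which is the source of Grover's quadratic speedup over classical search.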

In conclusion, the differences between qubits and bits represent a fundamental shift in the computational paradigm. By leveraging superposition, entanglement, and interference, quantum computers could solve certain problems far faster than classical machines, with potential impact across technology, science, and industry. Realizing that potential depends on overcoming decoherence, scaling, and error correction, and researchers and developers who understand and harness the unique properties of qubits are laying the groundwork for that progress.